Thread and Processes

Goals

- What threads are and what they are not

- Why threads are useful

- How to write a program using threads

- Alternatives to using threads

Recap: Threads and Process

- Thread: Execution Context

- Fully describes program state

- Program Counter, Registers, Execution Flags, Stack

- Address space (with or w/o translation)

- Set of memory addresses accessible to program (for read or write)

- May be distinct from memory space of the physical machine(in which case programs operate in a virtual address space)

- Process: an instance of a running program

- An execution environment with restricted rights

- One or more threads executing in a (protected) Address Space

- Encapsulates one or more threads sharing process resource

- Owns memory (mapped pages), file descriptors, network connections, file system context…

- In modern OS, anything that runs outside the kernel runs in a process

- Dual mode operation / Protection

- Only the “system” has the ability to access certain resource

- Combined with translation, isolates programs from each other and the OS from programs

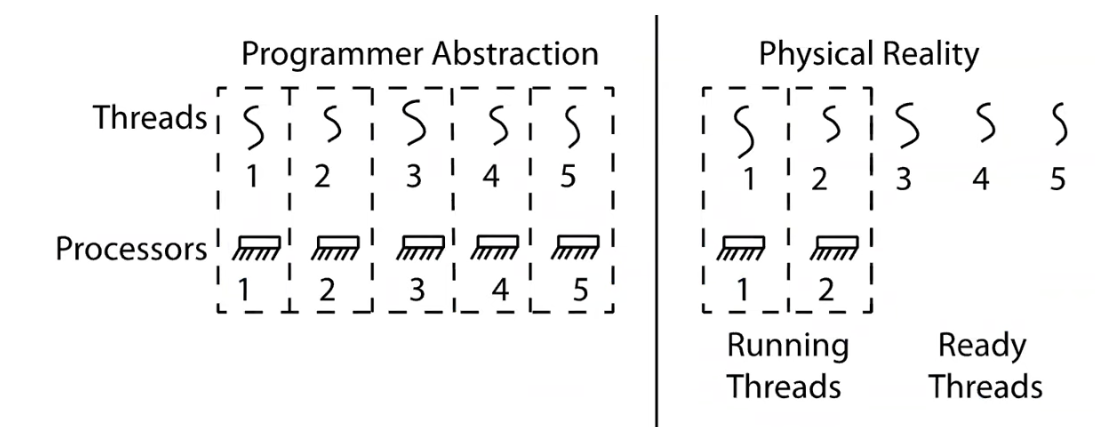

Recap: Illusion of Multiple Processors

- Threads are virtual cores

- Multiple threads - Multiplex hardware in time

- A thread is executing on a processor when it is resident in that processor’s registers

- Each virtual core(thread) has:

- Program counter (PC), stack pointer(SP)

- Registers - both integer and floating point

- Where is the thread?

- On the real(physical) core or

- Saved in chunk of memory - called the Thread Control Block (TCB)

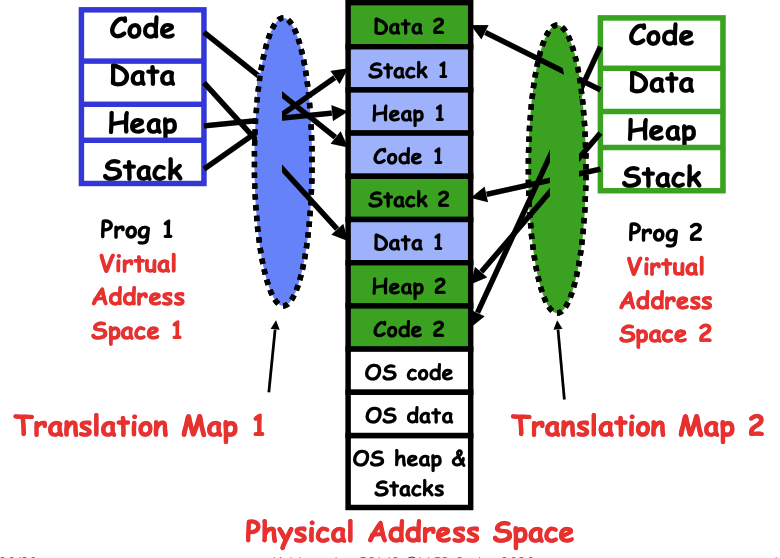

Recap: Memory Address Translation through Page Table

Note that the translation map guarantees that each process maps their address spaces to different locations in the physical memory, preventing one process accessing the memory from the other process.

Threads

Motivation for Threads

- Operating System must handle multiple things at once (

MTAO) - Processes, interrupts, background system maintenance

- Networked servers must handle MTAO

- Multiple connections handled simultaneously

- Parallel programs must handle MTAO

- To achieve better performance

- Programs with user interface often must handle MTAO

- To achieve user responsiveness while doing computation

- Network and disk bound programs must handle MTAO

- To hide network/disk latency

- Sequence steps in access or communication

Concurrency is not Parallelism

- Concurrency is about handling multiple things at once

- Parallelism is about doing multiple things simultaneously (usually with multiple CPU cores)

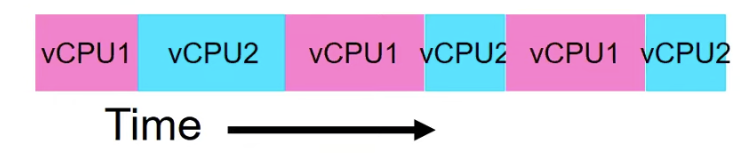

- Two threads on a single-core system

- execute concurrently, but not in parallel

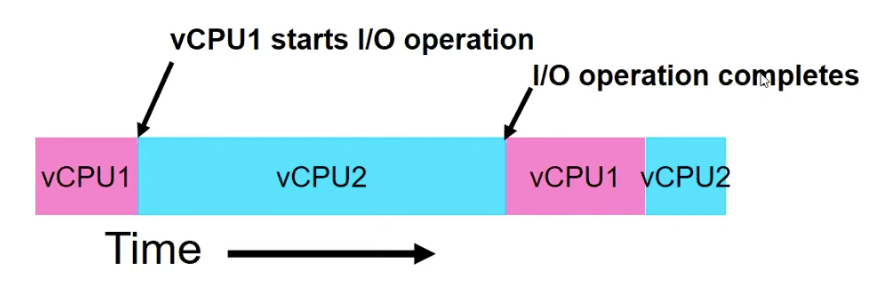

Threads Mask I/O Latency

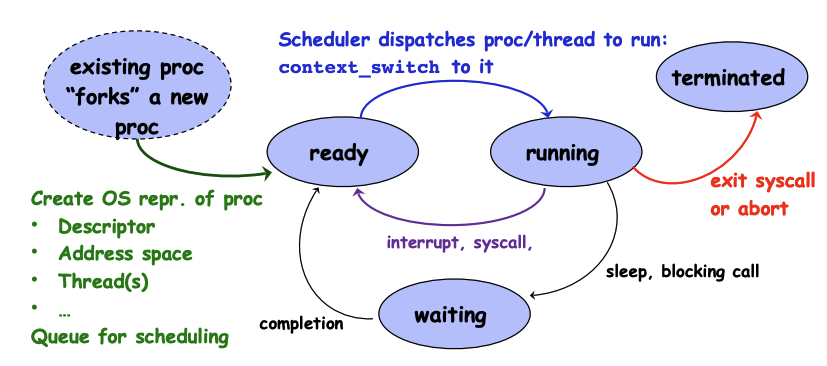

A thread is in one of the following three states:

RUNNINGREADY- eligible to run, but not currently runningBLOCKED- ineligible to run

If a thread is waiting for an I/O to finish, the OS marks it as BLOCKED. Once the I/O finally finishes, the OS marks it as READY.

The OS(scheduler) maintains a ready queue for context switching. If there are no threads perform I/O, threads are put into the ready queue for execution:

If there is a thread performing a blocking I/O operation, the schedule will put that thread into a wait queue associated with I/O, once it finishes, the scheduler will move it back to the wait queue.

The blue thread in the above picture runs without interruption because the pink thread is doing the I/O operation and is removed from the READY queue, so there is no context switching during that time.

pthreads

int pthread_create(

pthread_t* tidp,

const pthread_attr_t* attr,

void *(*start_routine)(void *),

void * arg);

- thread is created executing

start_routinewithargas its sole argument - return is implicit call to

pthread_exit

void pthread_exit(void *value_ptr);

- terminates the thread and makes

value_ptravailable to any successful join

int pthread_join(pthread_t thread, void **value_ptr);

- Suspends execution of the calling thread until the target

threadterminates - On return with a non-NULL

value_ptrthe value passed topthread_exist()by the terminating thread is made available in the location referenced byvalue_ptr.

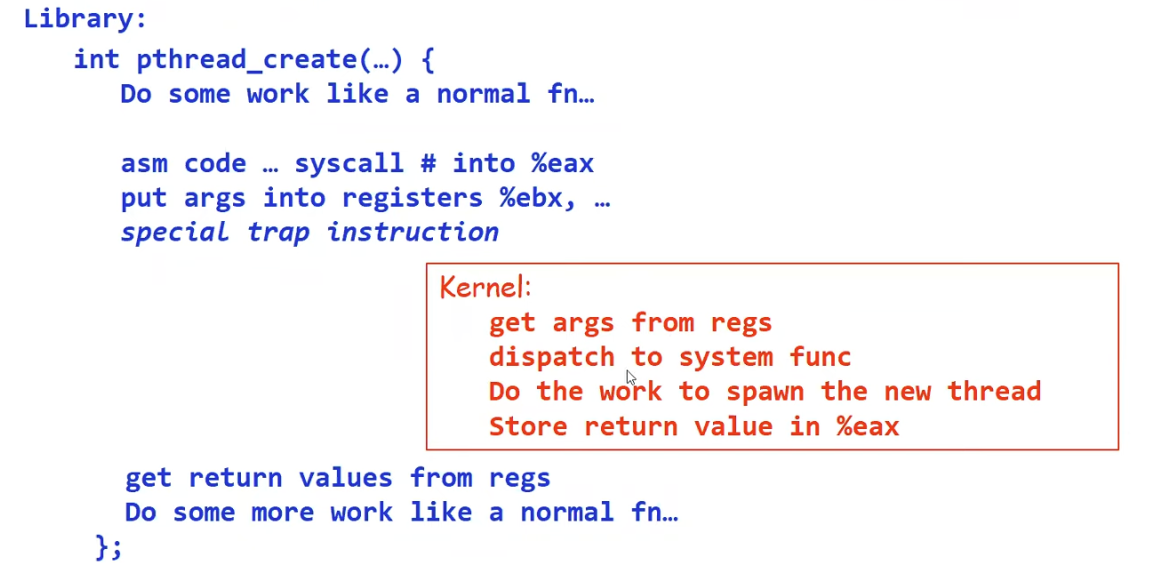

What happens when pthread_create(...) is called in a process?

pthread_create is just a C function that has special assembly code in it. The assembly code helps set up the registers in a way the kernel is going to recognize. And then it executes a special trap instruction, which is a way to jump into the kernel (think of it as an error). This will let us transition out of user mode into kernel mode due to the exception (trap).

Once we jump into the kernel, the kernel knows that this is the system call for creating a thread. It then gets the arguments, does the creation of the thread and returns the pointer (there is a special register to store return value).

Now we are back to the user mode. We grab the return value from the registers and do the rest of the work. This pthread_create function is not a normal function. It wraps the system call internally, but from users perspective, it just looks like a library C function.

It’s worth noting that the system call can take thousands of cycles. The OS has to store a bunch of registers when going to the kernel and come out again. The translation from user mode to kernel mode is not cheap.

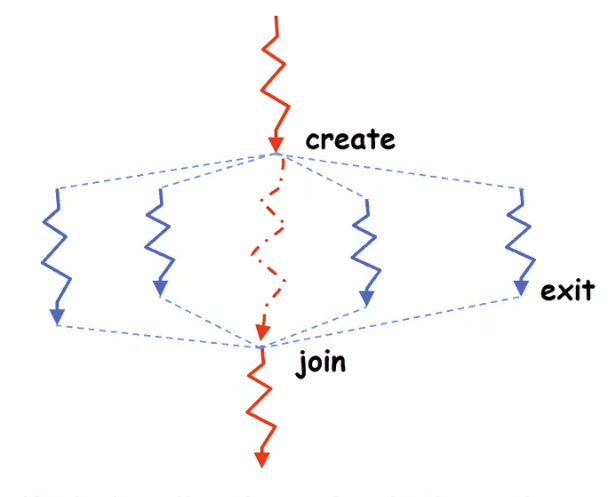

fork join

- Main thread creates(forks) collection of sub-threads passing them args to work on

- … and then joins with them, collection results

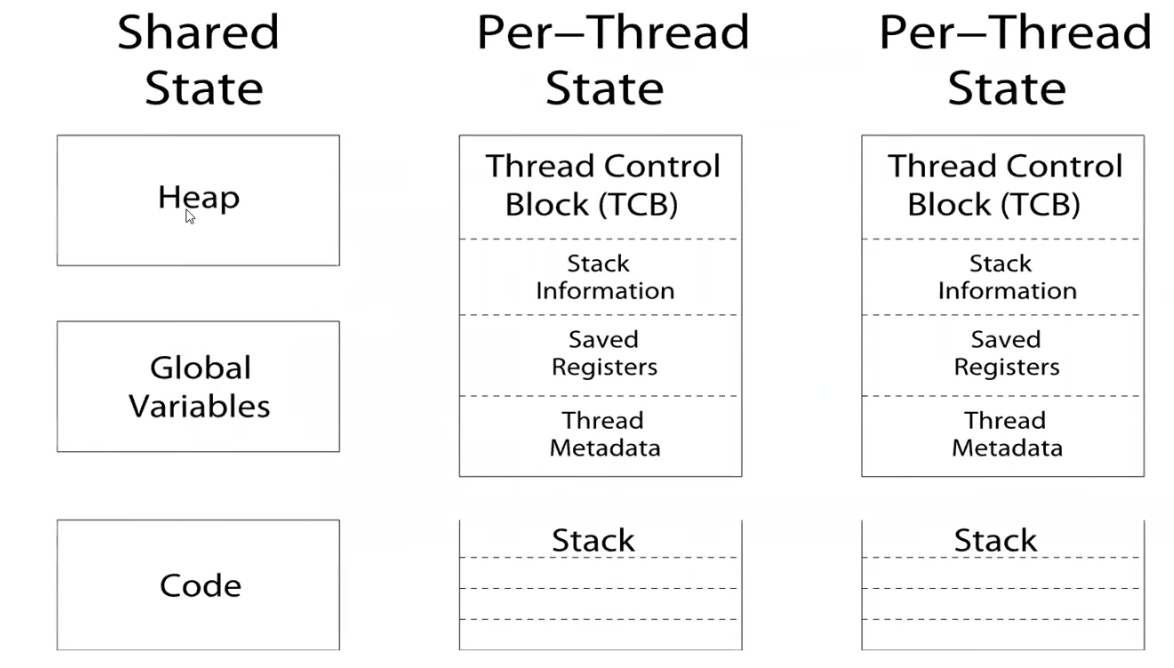

Thread states

- State shared by all threads in process/address space

- Content of memory (global variables, heap)

- I/O state(file descriptors, network connections, etc)

- State “private” to each thread

- Kept in TCB (Thread Control Block)

- CPU registers(including, program counter)

- Execution stack

- Parameters, temporary vars

- Return PCs are kept while called procedures are executing

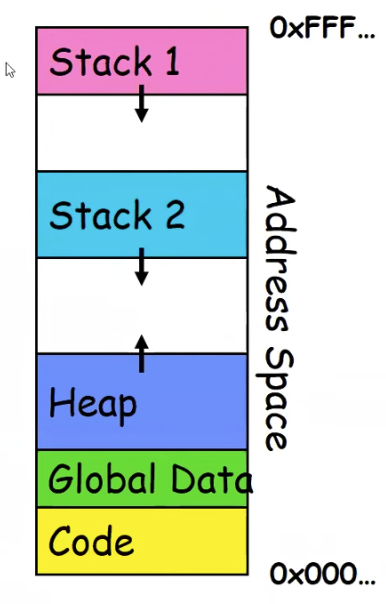

Execution Stacks

- Two sets of CPU registers

- Two sets of stacks

- Issues:

- How do we position stacks relative to each other?

- What maximum size should we choose for the stacks?

- What happens if threads violate this?

- How might you catch violations?

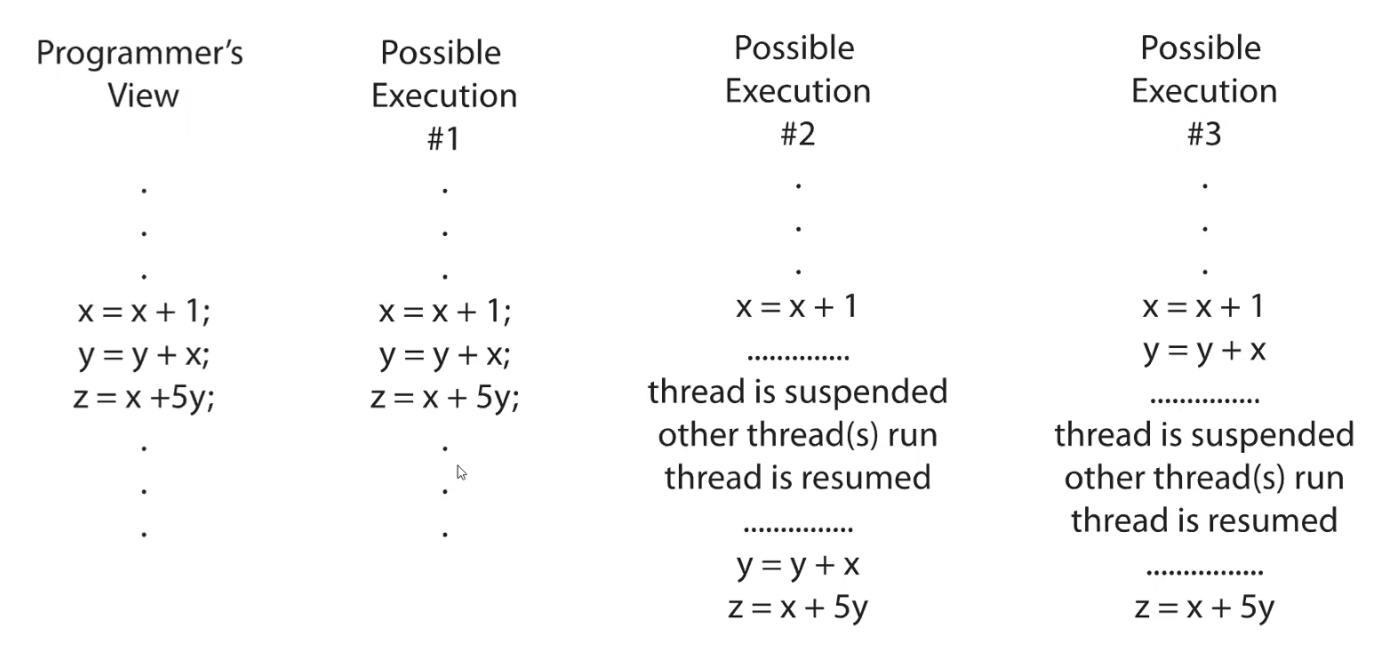

Thread Execution and Race Condition

- Illusion: Infinite number of processors

- Reality: Threads execute with variable “speed”

- Programs must be designed to work with any schedule

- Non-determinism:

- Scheduler can run threads in any order

- Scheduler can switch threads at any time

- This can make testing very difficult

- Independent threads

- No state shared with other threads

- Deterministic, reproducible conditions

- Synchronization:

- Coordination among threads, usually regarding shared data

- Mutual Exclusion:

- Ensuring only one thread does a particular thing at a time (one thread excludes the others)

- A type of synchronization

- Critical Section:

- Code exactly one thread can execute at once

- Result of mutual exclusion

- Lock

- An object only one thread can hold at a time

- Provides mutual exclusion

- Locks provide two atomic operations

lock.lock()- wait until lock is free; then mark it as busy. If the lock is being hold by other threads, the current thread that tries to acquire it will be put tosleep.lock.unlock()- mark lock as free- should only be called by a thread that currently holds the lock

- Semaphore:

- A kind of generalized lock

- First defined by Dijkstra in late 60s

- Main synchronization primitive used in original UNIX

- A Semaphore has a non-negative integer value and supports the following two operations:

P() or down(): atomic operation that waits for semaphore to become positive, then decrements it by 1V() or up(): an atomic operation that increments the semaphore by 1, waiting up a waitingP, if any

Two patterns of using Semaphores

- mutual exclusion(like lock), also called a “binary semaphore” or “mutex”

// the initial value of semaphore = 1;

// all the subsequence threads that try to access the code (decrement the semaphore)

// will be put at `sleep()`

semaphore.down();

// critical section goes here

semaphore.up();

- Signaling other threads, e.g. ThreadJoin

// the initial value of semaphore = 0;

// in the main thread

ThreadJoin {

semaphore.down(); // <----

} // |

// |

// in another thread // |

ThreadFinish { // |

semaphore.up(); //-------

}

Processes

- How to manage process state?

- How to create a process?

- How to exit from a process?

- If processes are created by other processes, how does the first process start?

- First process is started by the kernel

- Often configured as an argument to the kernel before the kernel boots

- Often called the

"init"process

- After this, all processes on the system are created by other processes

- First process is started by the kernel

Kernel APIs

Every process react to a bunch of signals

exit- terminate a process- The

exist(0)function

- The

fork- copy the current process- State of the original process duplicated in the child process

- Address Space (memory), File descriptors, etc,…

- State of the original process duplicated in the child process

exec- change the program being run by the current processwait- wait for a process to finish

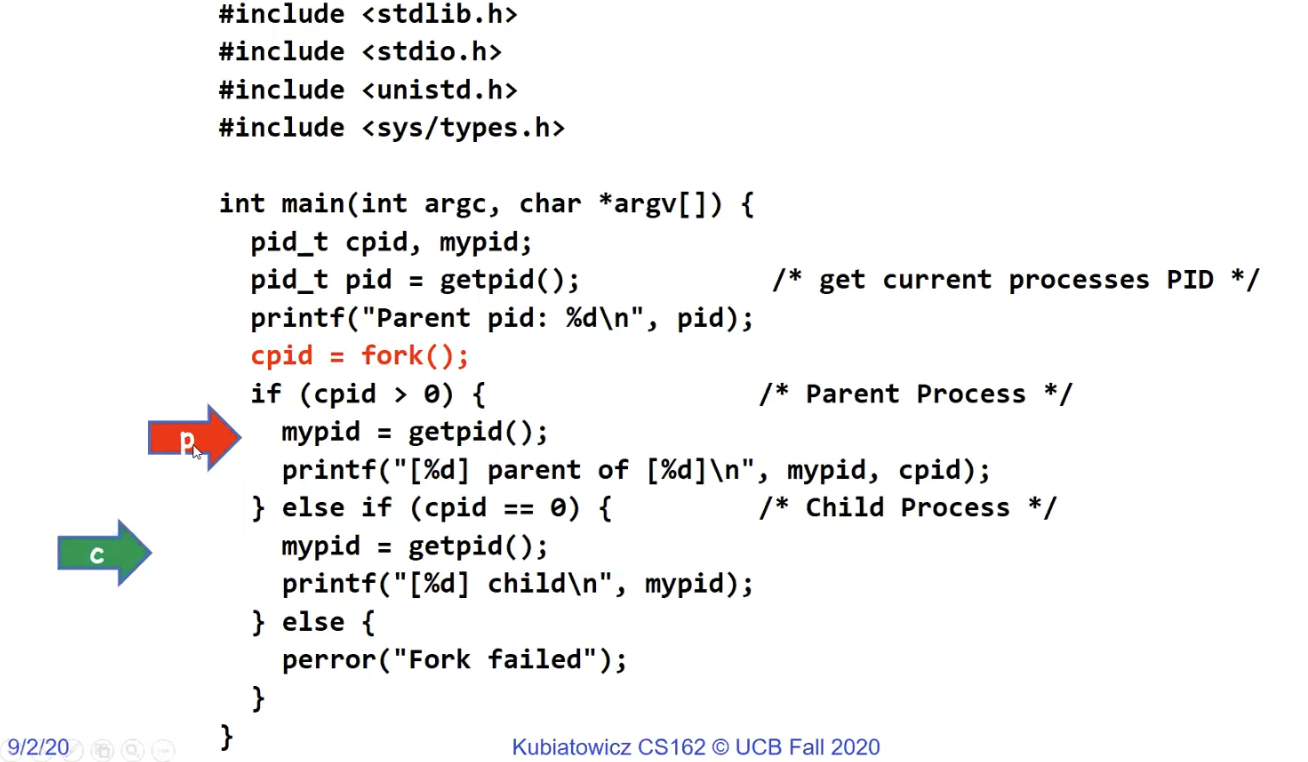

fork

pid_t fork()- copy the current process- new process has different pid

- new process contains a single thread

- Return value from

fork()- when

>0:- running in parent process

- return value is pid of new child process

- when

=0:- running in new child process

- when

<0:- Error, must handle

- Running in the original process

- when

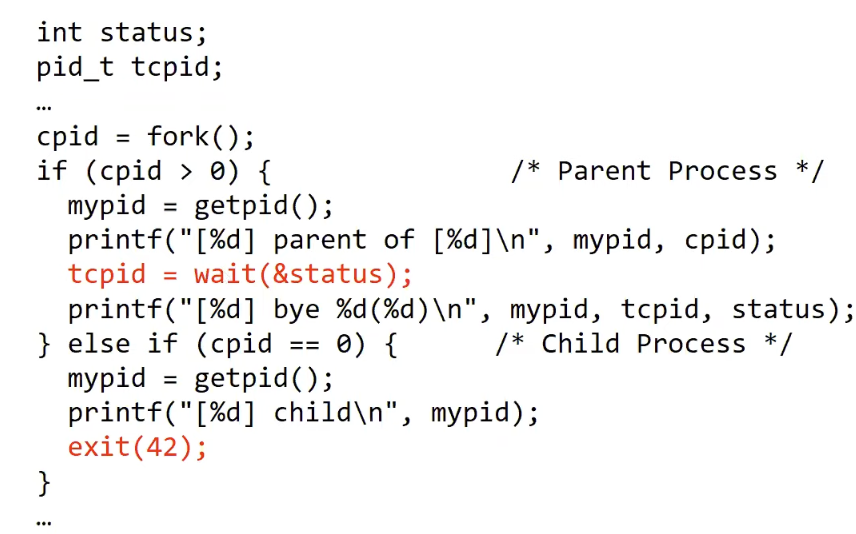

After fork() is called. The code after that will be executed by two processes at the same time - parent and child. This is because child inherits all the information from the parent, including the executing context of the current thread that is calling the fork(). Depending on the return value (cpid), we know if the current process is parent or child. In the above example, the red arrow points to the parent process. The green arrow points to the child process.

Don’t call fork() in a multithreaded process. The other threads(the ones that didn’t call fork()) just vanish.

- What if one of these threads was holding a lock

- What if one of these threads was in the middle of modifying a data structure

- No cleanup happens!

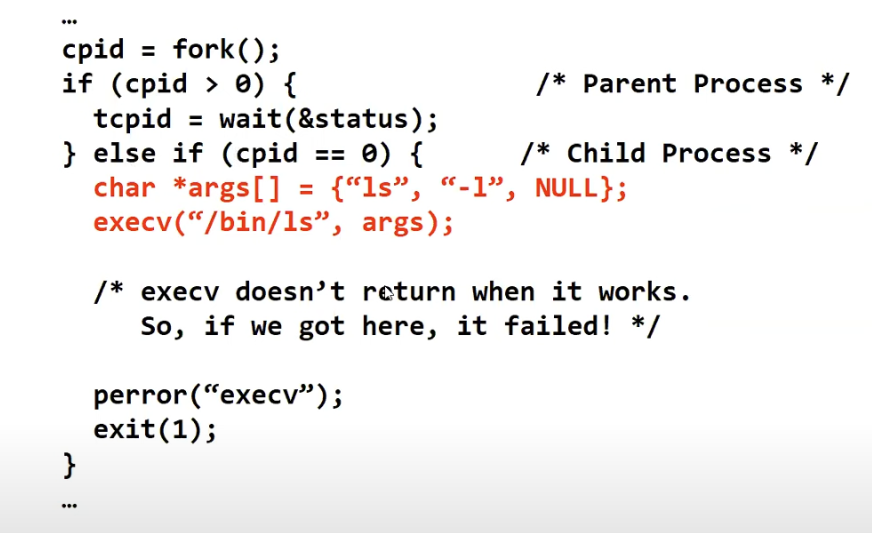

exec

If we want the child process to execute something different, we can use the execfunction

In this case, the child process will immediately execute ls -al once it’s created. It is safe to call exec() in the child process. It just replaces the entire address space.

wait

The wait function waits for the child process to finish. In the above example, once the child process exits with the status code 42, the parent process will continue to get the pid from the process and continue the execution.

POSIX Signals

kill- send a signal (interrupt-like notification) to another processsigaction- set handlers for signals

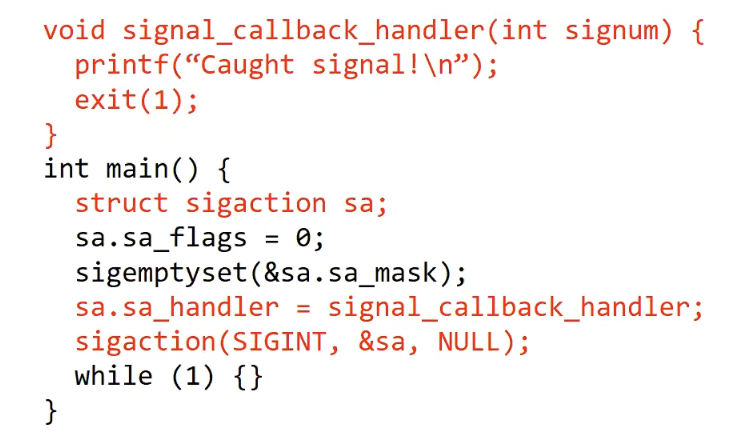

The sigaction function allows you to add a handler in the user level space to capture signals thrown from the OS level. For each signal, there is a default handler defined by the system.

In the code above, we have an infinite while loop. The process won’t exit until receives a SIGINT signal. The SIGINT can be triggered using ctrl-c from keyboard, which is going to terminate the process.

- Common POSIX Signals

- SIGINT: ctrl-c

- SIGTERM: default for the

killshell command - SIGSTP: ctr-z (default action: stop process)

- SIGKILL/SIGSTOP: – terminate/stop process

- Can’t be changed or disabled with

sigaction

- Can’t be changed or disabled with

Summary

- Threads are the OS unit concurrency

- Abstraction of a virtual CPU core

- Can use

pthread_create, etc, to manage threads within a process - They share data -> needs synchronization to avoid data races

- Processes consist of one or more threads in an address space

- Abstraction of the machine: execution environment for a program

- Can use

fork, exec, etc to manage threads within a process